Countersniper System

Our team has a two-decade-long history of research on acoustic shot detection and localization systems. Our most significant contributions to the field include the following papers:

- wireless sensor network-based countersniper system in 2003

- multiple simultaneous shot resolution algorithm in 2004

- shockwave length-based weapon classification approach in 2006

- mobile phone-based countersniper system in 2011

- shockwave length-based trajectory estimation algorithm in 2011

- animal-borne anti-poaching system in 2019

Short YouTube videos of various demonstrations are below:

- demo showing the single-channel system in action at Ft. Benning in 2003

- demo showing the system localizing four near-simultaneous shooters at Ft. Benning in 2004

- demo showing the same system with fully automatic fire

- demo of the multi-channel system in a private test facility in 2009

The majority of countersniper systems are based on acoustic measurements. The two distinctive observable acoustic events are the muzzle blast and the acoustic shock wave, that is, the sonic boom produced by a supersonic projectile. The main limiting factor in standalone systems is the requirement for line of sight, which is a major impediment in urban environments. In fact, the performance of most current acoustic systems degrades significantly in the concrete jungle, since some of the few available sensor readings are typically corrupted by multipath effects.

A wireless sensor network-based approach can eliminate this problem. Instead of using a few expensive acoustic sensors, a low-cost ad-hoc acoustic sensor network measures both the muzzle blast and shock wave to accurately determine the location of the shooter and the trajectory of the bullet. The basic idea is simple: using the arrival times of the acoustic events at different sensor locations, the shooter position can be accurately calculated using the speed of sound and the location of the sensors.
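
The arrival-time idea can be illustrated with a minimal Python sketch. It is not the fusion algorithm used in the system; it only assumes a 2D layout, a fixed speed of sound of 343 m/s, and clean muzzle blast arrival times. The function names (`shot_time_spread`, `locate`) and the grid-search strategy are hypothetical choices made for this example. Each sensor's arrival time minus the travel time from a candidate position gives an implied shot time; at the true shooter position all implied shot times agree, so the search looks for the position where their spread is smallest.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 °C


def shot_time_spread(pos, sensors, toas, c=SPEED_OF_SOUND):
    """Variance of the shot times implied by each sensor for a candidate position."""
    implied = [t - math.dist(pos, s) / c for s, t in zip(sensors, toas)]
    mean = sum(implied) / len(implied)
    return sum((e - mean) ** 2 for e in implied) / len(implied)


def locate(sensors, toas, lo, hi, steps=20, rounds=8):
    """Coarse-to-fine 2D grid search for the position that minimizes the spread."""
    x0, y0 = lo
    x1, y1 = hi
    best = None
    for _ in range(rounds):
        dx, dy = (x1 - x0) / steps, (y1 - y0) / steps
        grid = [(x0 + i * dx, y0 + j * dy)
                for i in range(steps + 1) for j in range(steps + 1)]
        best = min(grid, key=lambda p: shot_time_spread(p, sensors, toas))
        # Zoom in on a window of two grid cells on each side of the best point.
        x0, x1 = best[0] - 2 * dx, best[0] + 2 * dx
        y0, y1 = best[1] - 2 * dy, best[1] + 2 * dy
    return best
```

Calling `locate(sensors, toas, (0, 0), (50, 100))` with sensor coordinates in meters and arrival times in seconds returns an `(x, y)` estimate inside the searched area. Because the unknown shot time is removed by looking only at the spread, the sensors need a common time base but no knowledge of when the shot was fired.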

Our first system, developed in 2004, was based on many low-cost single-microphone sensor nodes. Whenever an event was detected, the time of arrival was measured by multiple sensors and the information was propagated to the base station through the network using a specially tailored data aggregation, time conversion, and routing service. The novel sensor fusion approach running on the base station (for example, a laptop) was able to eliminate observations due to echoes, resolve multiple simultaneous shots, and locate the shooter with unprecedented accuracy.
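
One way to see how a fusion step can discard echoes is to count, for a candidate shooter position, how many detections agree on the same shot time. The sketch below is only an illustration under stated assumptions, not the published algorithm: the function name `consistency`, the 2 ms tolerance `tau`, and the 343 m/s speed of sound are all hypothetical choices. An echo travels a longer path, so it implies a later shot time than the direct-path detections and falls outside the agreeing group.

```python
import math


def consistency(pos, sensors, toas, c=343.0, tau=0.002):
    """Largest number of detections whose implied shot times agree within tau seconds."""
    shot_times = sorted(t - math.dist(pos, s) / c for s, t in zip(sensors, toas))
    best, start = 0, 0
    for end in range(len(shot_times)):
        # Shrink the window until its earliest and latest shot times fit within tau.
        while shot_times[end] - shot_times[start] > tau:
            start += 1
        best = max(best, end - start + 1)
    return best
```

A position supported by many detections scores high, while detections that do not fit (echoes, or detections belonging to a different shot) simply do not contribute. Searching for several distinct high-scoring positions is one conceivable way to separate near-simultaneous shots.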

The system was demonstrated and evaluated in various US Army test facilities providing a realistic urban environment. For example, using 60 sensors in a 50x100 meter area, the accuracy of the system was better than 1 meter on average in 3D; that is, it was able to determine the exact window from which a particular shot was taken. The latency of the system was under 2 seconds.

View the video of our very first live demonstration:

The system can also distinguish multiple shots and localize them accurately:

Multi-Channel System

The disadvantage of our first approach is its static nature and the need for many sensors. Hence, we developed a soldier-wearable version that required only a handful of sensors. To compensate for the small number of sensors, each sensor was equipped with four microphones forming a tiny microphone array. As such, a single sensor alone was able to locate the shooter. Multiple sensor nodes still cooperated to provide much better accuracy and robustness as well as trajectory estimation and weapon classification.
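
To give a feel for what a small microphone array adds, the following Python sketch estimates only the bearing of a distant source from the arrival-time differences across one array. It is an assumption-laden illustration, not the method used on the sensor nodes: it treats the incoming wave as a plane wave, works in 2D, assumes a 343 m/s speed of sound, and the function name `bearing_from_toas` is hypothetical. Range estimation, which a single node also needs in order to locate the shooter, is not covered here.

```python
import math


def bearing_from_toas(mics, toas, c=343.0):
    """Least-squares far-field bearing (radians) toward the source, planar array."""
    (x0, y0), t0 = mics[0], toas[0]
    # Plane-wave model relative to the first microphone:
    #   (x_i - x0) * ux + (y_i - y0) * uy = -c * (t_i - t0)
    # where (ux, uy) is the unit vector pointing from the array to the source.
    sxx = sxy = syy = bx = by = 0.0
    for (x, y), t in zip(mics[1:], toas[1:]):
        dx, dy, rhs = x - x0, y - y0, -c * (t - t0)
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        bx += dx * rhs
        by += dy * rhs
    det = sxx * syy - sxy * sxy  # zero if all microphones lie on one line
    ux = (bx * syy - by * sxy) / det
    uy = (by * sxx - bx * sxy) / det
    return math.atan2(uy, ux)
```

With microphones only about 10 cm apart, the time differences are at most a few hundred microseconds, so the timing accuracy of each detection dominates the bearing error. This is one reason cooperation among several nodes still improves the result.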

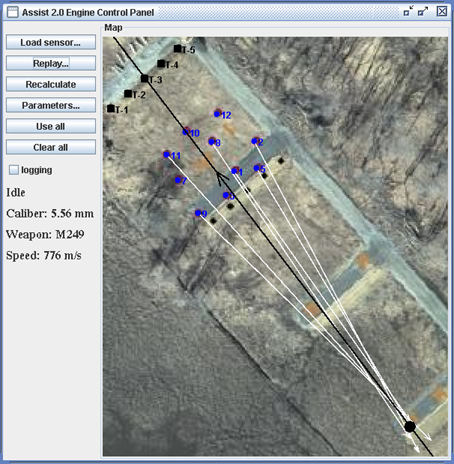

The following picture shows a 100 m shot localized by the network (trajectory: black, shooter location: black dot). The weapon and caliber are also shown on the left. The white arrows indicate that 6 out of the 10 sensors were able to localize the shooter alone, that is, they relied only on their own detections using their microphones with 10 cm separation.

More details can be found in our MobiSys 2007 paper.

Mobile Phone-based System

The motivation behind the mobile phone-based approach is simply that most people, including soldiers, already carry smartphones. So why not utilize them? The entire approach could be implemented on phones, since they already have a microphone, GPS, Wi-Fi or 3G networking, a display, and enough computing power to carry out the sensor fusion. However, it would not be very accurate for various reasons, and continuous sampling of the microphone would drain the battery fast. Instead, we developed a custom Bluetooth headset that doubles as a single-channel sensor node. It needs to use the phone only when a shot is detected.

The challenge, of course, is that we now have only a handful of sensors, each with a single microphone. Therefore, we developed a new sensor fusion approach that utilizes the shockwave length for miss distance and trajectory estimation. The times of arrival of the muzzle blast and the shockwave, in turn, make it possible to localize the shooter and classify the weapon used. This works surprisingly well, as described in our SenSys 2011 paper.
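
The link between shockwave length and miss distance can be sketched with a commonly cited form of Whitham's formula, T = 1.82 M b^(1/4) d / (c (M^2 - 1)^(3/8) l^(1/4)), where T is the shockwave length, M the Mach number of the projectile, b the miss distance, d and l the projectile diameter and length, and c the speed of sound. The Python below is only an illustration of that relationship, not the fusion algorithm from the paper; the function names and all numeric inputs are assumptions, and in practice the projectile parameters and speed are not known in advance.

```python
def shockwave_length(miss_distance, mach, diameter, length, c=343.0):
    """Shockwave length in seconds from Whitham's formula (SI units throughout)."""
    return (1.82 * mach * miss_distance ** 0.25 * diameter) / (
        c * (mach ** 2 - 1) ** 0.375 * length ** 0.25
    )


def miss_distance_from_length(t_shock, mach, diameter, length, c=343.0):
    """Invert Whitham's formula to get the miss distance in meters."""
    root = t_shock * c * (mach ** 2 - 1) ** 0.375 * length ** 0.25 / (
        1.82 * mach * diameter
    )
    return root ** 4


# Hypothetical projectile: 7.62 mm diameter, 30 mm long, flying at Mach 2.
t = shockwave_length(20.0, mach=2.0, diameter=0.00762, length=0.03)
b = miss_distance_from_length(t, mach=2.0, diameter=0.00762, length=0.03)
```

Because the miss distance grows with the fourth power of the shockwave length, a 1% error in the measured length turns into roughly a 4% error in the estimated miss distance, so the length has to be measured precisely for this to be useful.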

Sponsors

We gratefully acknowledge the generous sponsorship of DARPA, ONR, ARL, and Databouy Inc. that made our research possible.

Contacts

For more information, contact Akos Ledeczi.